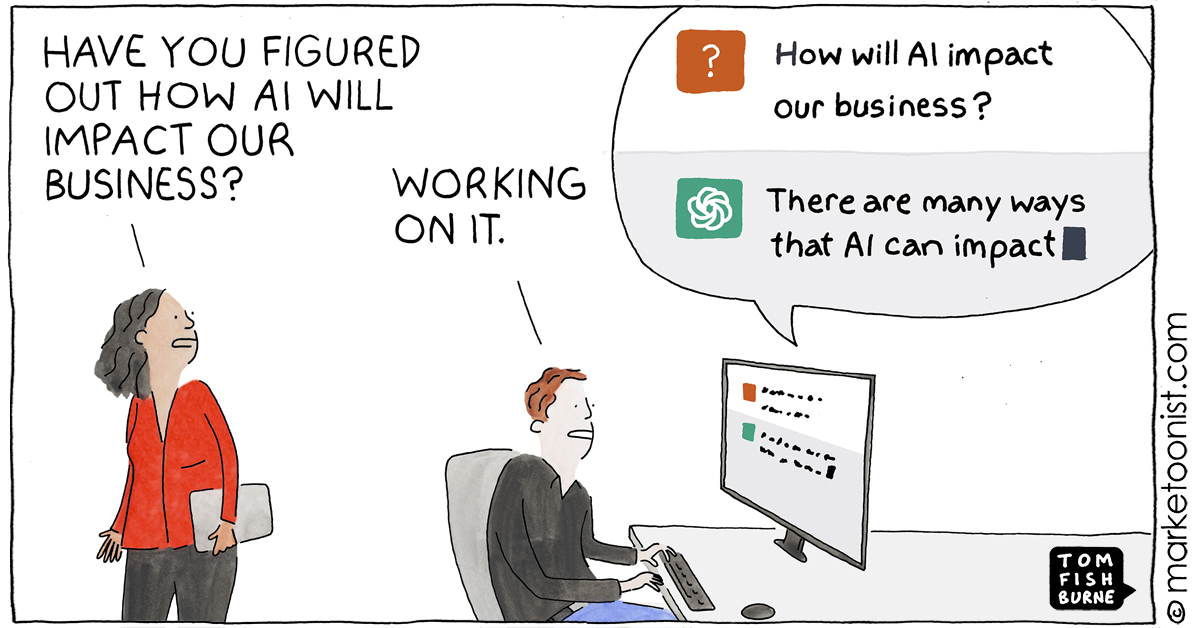

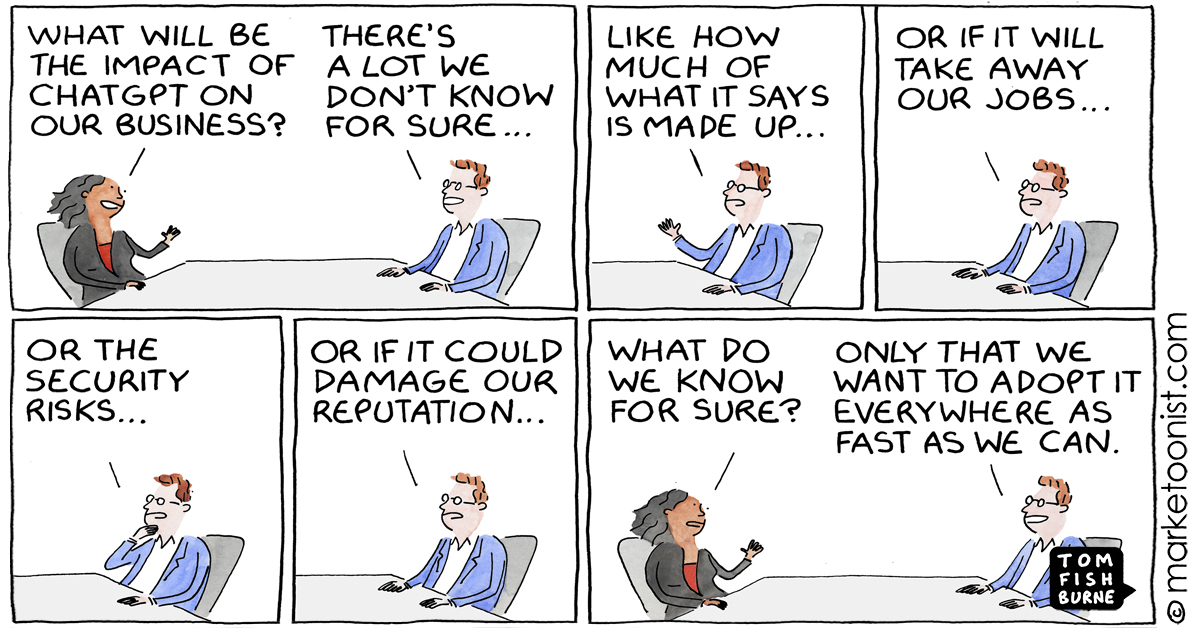

In adopting generative AI, organizations are pushing the accelerator to the floor while trying to work on the engine. This urgency without clarity is a recipe for missteps.

The National Eating Disorders Association (NEDA), a nonprofit, found this out recently after replacing a 6-person helpline team and 20 volunteers with a chatbot named Tessa.

A week later, NEDA had to disable Tessa when the chatbot was recorded giving harmful advice that could make eating disorders worse.

At a digital transformation summit hosted by Procter & Gamble, one of the company's attorneys talked about the challenge of balancing urgency with safeguards during a period of rapid change. She shared a model that stuck with me: providing “freedom within a framework.”

BCG Chief AI Ethics Officer Steven Mills recently advocated a similar “freedom within a framework” approach to AI. As he put it:

“It’s important folks get a chance to interact with these technologies and use them; stopping experimentation is not the answer. AI is going to be developed across an organization by employees whether you know about it or not…

“Rather than trying to pretend it won’t happen, let’s put in place a quick set of guidelines that lets your employees know where the guardrails are … and actively encourage responsible innovations and responsible experimentation.”

One safeguard Salesforce suggests is the “human-in-the-loop” workflow. Two architects of Salesforce’s Ethical AI Practice, Kathy Baxter and Yoav Schlesinger, put it this way:

“Just because something can be automated doesn’t mean it should be. Generative AI tools aren’t always capable of understanding emotional or business context, or knowing when they’re wrong or damaging.

“Humans need to be involved to review outputs for accuracy, suss out bias, and ensure models are operating as intended. More broadly, generative AI should be seen as a way to augment human capabilities and empower communities, not replace or displace them.”